Without creating and protecting the right infrastructure, autonomous systems cannot function reliably, leading to safety risks, inefficiencies, and public mistrust. Building this infrastructure requires collaboration between governments, private companies, and communities.

We invest in autonomous systems because we believe the claims that they reduce human error, are more efficient, reduce costs, give people access to services they could not reach before, and, finally, are more prestigious. We touched on this concept in our previous article, Look for Autonomous Mindsets Not Autonomous Cars-I. In today’s article we elaborate on this concept and then examine the proven risks of turning our societies into autonomous cities where humans play only a limited role in the bigger picture.

Why do we believe in the value added by autonomous systems?

Naturally, we strive to thrive, grow, and expand. Media promises about AI-driven autonomous systems have left us with big dreams of:

- Reducing human error, which we believe is a leading cause of disasters. Self-driving systems theoretically eliminate risks such as distracted driving or fatigue (we debate this point in depth below). Autonomous algorithms also minimize emotional decision-making. Although this can be valuable in financial markets and stock trading, applying it on a global scale would diminish the human element in cultures that normally adapt quickly.

- Greater efficiency. Self-driving systems, for example, optimize fuel consumption, reduce traffic congestion, and operate 24/7 without breaks. Autonomous systems also make it possible to monitor vast agricultural crops around the clock, all year round. But do we really need to be in control 24/7, all year round?

- Lower costs and higher profits through eliminating unneeded labor. Over time, self-driving vehicles reduce labor costs (e.g., drivers’ salaries). The same applies to autonomous surveillance systems, which reduce the number of human security staff. Yet autonomous vehicles (AVs) and surveillance systems are susceptible to multiple forms of cyberattack, such as spoofing: generating synthetic signals that mimic legitimate ones and mislead the system into misinterpreting its environment.

- Prestige. Autonomous systems are seen as more prestigious. Cities like Dubai are competing to position themselves as futuristic hubs, attracting investment and tourism through advanced technologies. They compete with Hong Kong’s and London’s advanced algorithmic trading platforms, Singapore’s autonomous public transportation and traffic management systems, and even hospitals showcasing robotic surgery capabilities.

- Personal benefits. Autonomous cleaning robots and smart home systems help the elderly live independently; self-driving transportation can serve people unable to drive, such as the elderly or disabled; and telemedicine robots enable remote medical consultations for rural or mobility-impaired patients.

BESTPRICEENDS-11FEB

Reality checks that reveal the risks of autonomous systems

The primary danger lies not in the existence of autonomous systems, but in our overconfidence in their infallibility.

1. The Claim: Machines Eliminate Human Errors

The argument often used to justify autonomous systems is that human errors—distracted driving, fatigue, or impaired judgment—are the leading cause of accidents. Proponents of automation claim:

- Machines do not get distracted or tired.

- Autonomous systems can process information faster and make decisions in milliseconds.

- AI learns from large datasets, making it more efficient over time.

Reality Check:

While these points hold some truth, they oversimplify the situation. Machines do eliminate certain human vulnerabilities but introduce their own categories of errors that can be far more catastrophic. Examples:

- Algorithmic complexity: a sophisticated computer worm (Stuxnet) manipulated industrial control systems in Iranian nuclear facilities. The Lion Air Flight 610 and Ethiopian Airlines Flight 302 crashes revealed how automated flight control software (the Boeing 737 MAX’s MCAS) can introduce catastrophic errors.

- Inherent programming biases: an autonomous hiring algorithm developed by Amazon (since abandoned) was found to systematically discriminate against women. The AI learned and amplified the gender biases in historical hiring data, which could have caused widespread, institutionalized discrimination.

- Vulnerability to external manipulation: in a 2021 incident at a water treatment plant in Oldsmar, Florida, a threat actor remotely accessed the plant’s control system and attempted to increase sodium hydroxide levels by more than 100 times, dramatically altering the chemical composition of drinking water for about 15,000 residents.

- Lack of genuine contextual understanding: in the Flash Crash of May 6, 2010, computer algorithms trading at microsecond speeds created a feedback loop that caused the Dow Jones to drop about 9% within minutes, far more rapidly and destructively than human-driven market fluctuations.

2. The Nature of Machine Errors

Machine errors are fundamentally different from human errors:

- Complex Failures: A single error in a machine’s code or system design can propagate across the system, causing widespread failure.

  - Example: A sensor malfunction in a self-driving car, or in an automated flight control system such as MCAS, might lead the system to misidentify the situation. Without a human in the loop, nothing corrects the error.

- Security Risks: Machines are vulnerable to hacking, cyberattacks, and intentional sabotage.

  - Example: A hacker taking control of an autonomous vehicle fleet could turn a city’s transportation system into chaos or a weapon.

- Unpredictable Edge Cases: Autonomous systems struggle with rare, unpredictable scenarios outside their training data.

  - Example: A self-driving car may fail to recognize an unusual road obstruction, leading to an accident.

- Dependence on Infrastructure: Autonomous systems rely heavily on sensors, connectivity, and power. Failures in any of these components can result in system breakdowns.

  - Example: Power outages or network failures could incapacitate autonomous fleets.

3. Comparing Human and Machine Errors

Human errors are often recoverable because:

- Humans can adapt and improvise in real-time.

- Individuals make isolated mistakes; they don’t typically propagate across systems.

- Errors in judgment are often mitigated by social dynamics (e.g., another driver correcting course).

Machine errors, however, are systemic and less forgiving:

- A single flaw can compromise an entire system.

- Failures are harder to detect and fix without halting operations entirely.

- Machine errors can be exploited maliciously on a larger scale.

Example: In a human-driven accident, one car is affected. In an autonomous system failure, hundreds of vehicles in a network could be impacted simultaneously.
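
To make this contrast concrete, here is a minimal Python sketch under purely hypothetical numbers (not real-world statistics): a fleet of 500 vehicles where, in the human-driven case, each driver independently has a 0.2% chance of an error on a given day, and in the autonomous case the whole fleet shares one software stack with a 0.2% chance of a defect that hits every vehicle at once. The function names are invented for the illustration.

```python
from math import comb


def prob_at_least_independent(n: int, p: float, k: int) -> float:
    """P(at least k of n vehicles fail) when each fails independently with probability p.

    This is the upper tail of a binomial distribution.
    """
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


def prob_at_least_common_mode(n: int, q: float, k: int) -> float:
    """P(at least k of n vehicles fail) when a single shared defect (probability q)
    affects all n vehicles simultaneously, and nothing else fails."""
    if k <= 0:
        return 1.0
    if k > n:
        return 0.0
    return q


# Hypothetical fleet size, failure probabilities, and "mass incident" threshold.
n, p, q, k = 500, 0.002, 0.002, 100

# Both scenarios have the same expected number of affected vehicles per day...
print(f"expected vehicles affected per day: {n * p:.1f} vs {n * q:.1f}")
# ...but very different chances of a mass incident.
print(f"P(>= {k} affected), independent: {prob_at_least_independent(n, p, k):.2e}")
print(f"P(>= {k} affected), common-mode: {prob_at_least_common_mode(n, q, k):.2e}")
```

The averages are identical, yet independent errors almost never affect 100 vehicles at once, while the shared defect does so with the full 0.2% probability. That is the sense in which machine errors are "systemic": the risk concentrates in rare, fleet-wide events.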

4. Costs of Preventing Errors

Machine Error Prevention:

- Requires enormous budgets for R&D, testing, and system resilience.

- Demands continuous monitoring and updating to counteract evolving threats.

- Requires redundancy systems, which are costly and complex.
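
One common form of redundancy is triple modular redundancy: three independent sensors measure the same quantity and a voter accepts a value only when at least two agree. The Python sketch below is a hypothetical illustration (the function name, tolerance, and readings are invented), and it also shows where the cost comes from: every measured quantity needs three sensors instead of one, plus a rule for what to do when they disagree.

```python
from statistics import median
from typing import Optional, Sequence


def vote(readings: Sequence[float], tolerance: float) -> Optional[float]:
    """2-out-of-3 voting: return the median reading if at least two
    readings lie within `tolerance` of it; otherwise return None to signal
    that the system cannot trust its sensors and must fall back (e.g. to a human)."""
    m = median(readings)
    agreeing = [r for r in readings if abs(r - m) <= tolerance]
    return m if len(agreeing) >= 2 else None


# One faulty sensor (55.0) is outvoted by the two healthy ones.
print(vote([10.0, 10.1, 55.0], tolerance=0.5))   # 10.1
# All three disagree: no trustworthy value.
print(vote([1.0, 50.0, 100.0], tolerance=0.5))   # None
```

Note that voting only masks a fault in one sensor; a defect shared by all three (the same firmware bug, say) passes straight through, which is why redundancy alone does not remove systemic risk.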

Human Error Mitigation:

- Involves training, awareness programs, and fostering responsible behavior.

- Encourages systems that empower humans with tools to make better decisions, rather than replacing them entirely.

- Supports mental and physical well-being to reduce stress and fatigue.

Key Insight: While machines may promise scalability and efficiency, the cost of preventing failures in autonomous systems far outweighs the cost of training and equipping humans to make fewer errors.

5. The Hidden Profit Motive

The push for automation often prioritizes profitability over resilience:

- Autonomous systems reduce labor costs and expedite mass production, creating significant profit margins for companies.

- Centralized control of autonomous systems consolidates power and influence, potentially enabling monopolies.

- The narrative of “machines are safer” is marketed aggressively, diverting attention from the inherent risks.

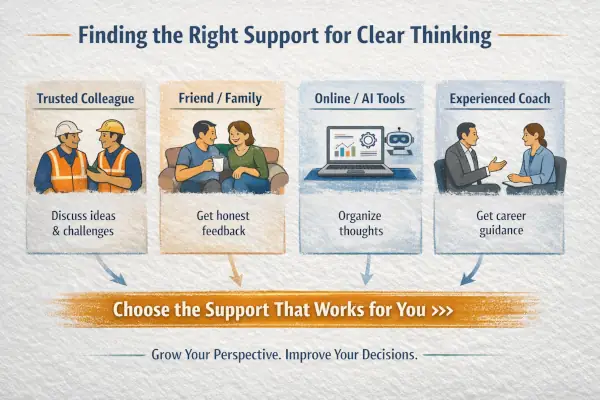

6. The Bigger Picture: Empowering Humans vs. Systems

- Supporting Human Autonomy:

  - Investing in human skills, judgment, and resilience builds a society capable of handling crises without over-reliance on machines.

  - Distributed human decision-making is inherently more robust against systemic failure.

- Balanced Approach:

  - Machines should augment human abilities, not replace them. For example, driver-assist features in cars enhance safety without removing human control.

  - Hybrid systems where machines handle repetitive tasks but humans oversee critical decisions strike the right balance.
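
Such a hybrid arrangement is often called "human-in-the-loop" control. The Python sketch below is a hypothetical illustration, not a reference design: `Action`, its `criticality` score, `CRITICAL_THRESHOLD`, and the reviewer callback are all assumptions made for the example. Routine actions run automatically; anything at or above the threshold is held until a human approves it.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical cut-off: actions scored at or above this need human sign-off.
CRITICAL_THRESHOLD = 0.7


@dataclass
class Action:
    name: str
    criticality: float  # hypothetical score in [0, 1], assigned by the system designer


def dispatch(action: Action, human_approves: Callable[[Action], bool]) -> str:
    """Execute routine actions automatically; escalate critical ones to a human."""
    if action.criticality < CRITICAL_THRESHOLD:
        return f"auto-executed: {action.name}"
    # Critical decision: the machine proposes, the human decides.
    if human_approves(action):
        return f"executed after human approval: {action.name}"
    return f"blocked by human reviewer: {action.name}"


def cautious_reviewer(action: Action) -> bool:
    """Stand-in for a human operator who rejects everything shown to them."""
    return False


for a in [Action("adjust cabin temperature", 0.1),
          Action("reroute fleet around outage", 0.9)]:
    print(dispatch(a, cautious_reviewer))
```

The design choice here is that the machine never silently executes a critical action: the human reviewer sees only the decisions that matter, which keeps oversight practical without removing it.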

7. The Real Danger of Relaxing into Automation

If we become too dependent on autonomous systems:

- Overconfidence: A false sense of security could lead to complacency in maintaining and monitoring these systems.

- Unpreparedness: People may lose the skills needed to act in emergencies, making society vulnerable to catastrophic failures.

- Ethical Implications: Decisions on safety, accountability, and risk become concentrated in the hands of a few corporations or developers.

Conclusion

Autonomous systems should not be a replacement for human autonomy but a tool to enhance it. While automation can reduce some human errors, the risks of systemic failure, malicious exploitation, and over-reliance on technology are real and significant. A more sustainable approach would focus on empowering humans to think, decide, and act with autonomy while using technology as a supportive partner rather than a replacement.

If you need help with any of the ideas discussed here, request Management Consultancy or Coaching Services from our Store.